In the wake of the long-running consolidation of the enterprise software industry, which reached its zenith in 2007 with the acquisitions of Business Intelligence market leaders Hyperion, Cognos, and Business Objects, one could certainly have been forgiven for being less than optimistic about the prospects for innovation in the Analytics, Business Intelligence, and Performance Management markets. This is especially true given the dozens of innovative companies that each of these large best-of-breed vendors had itself acquired before being acquired in turn. The pace of innovation at the large vendors has indeed slowed to a crawl while they digest the former best-of-breed market leaders. Thankfully for the health of the industry, nothing could be further from the truth in the market overall, which has in fact shown itself to be very vibrant, with a resurgence of innovative offerings springing up in the wake of the fall of the largest best-of-breed vendors.

So what are the trends and where do I see the industry evolving to? Few of these are mutually exclusive, but in order to provide some categorization to the discussion, they have been broken down as follows:

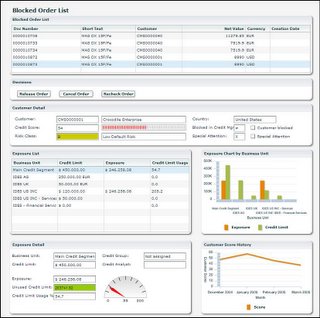

1. We will witness the emergence of packaged strategy-driven execution applications. As we discussed in Driven to Perform: Risk-Aware Performance Management From Strategy Through Execution (Nenshad Bardoliwalla, Stephanie Buscemi, and Denise Broady, New York, NY, Evolved Technologist Press, 2009), the end state for next-generation business applications is not merely to align the transactional execution processes contained in applications like ERP, CRM, and SCM with the strategic analytics of performance and risk management of the organization, but for those strategic analytics to literally drive execution. We called this “Strategy-Driven Execution”: the complete fusion of goals, initiatives, plans, forecasts, risks, controls, performance monitoring, and optimization with transactional processes. Visionary applications such as those provided by Workday and Salesforce.com, with embedded real-time contextual reporting available directly in the application (not as a bolt-on), and Oracle’s entire Fusion suite, which layers Essbase and OBIEE capabilities tightly into the applications' logic, clearly portend the increasing fusion of analytic and transactional capability in the context of business processes, a trend that will only accelerate.

2. The holy grail of the predictive, real-time enterprise will start to deliver on its promises. While classic analytic tools and applications have always done a good job of helping users understand what has happened and then analyze the root causes behind this performance, the information is often stale before it reaches its intended audience. The holy grail of analytic technologies has always been the promise of predicting future outcomes by sensing and responding, with minimal latency between event and decision point. This has manifested itself in the resurgence of interest in predictive analytics and in event-driven architectures that leverage a technology known as Complex Event Processing. Predictive capabilities appear to be on their way to breakout market acceptance, as evidenced by IBM’s significant investment in setting up its Business Analytics and Optimization practice with 4000 dedicated consultants, combined with the massive product portfolio of the Cognos and recently acquired SPSS assets. Similarly, Complex Event Processing capabilities, a staple of extremely data-intensive, algorithmically sophisticated industries such as financial services, have also become interesting to a number of other industries that cannot cope with the amount of real-time data being generated and need to be able to capture value and decide instantaneously. Combining these capabilities will lead to new classes of applications for business management that were unimaginable a decade ago.

3. The industry will put reporting and slice-and-dice capabilities in their appropriate places and return to its decision-centric roots with a healthy dose of Web 2.0 style collaboration. It was clear to the pioneers of this industry, beginning as early as H.P. Luhn's brilliant visionary piece A Business Intelligence System from 1958, that the goal of these technologies was to support business decision-making activities, and we can trace the roots of modern analytics, business intelligence, and performance management to the decision-support notion of decades earlier. But somewhere along the way, business intelligence became synonymous with reporting and slicing-and-dicing, which is a metaphor that suits analysts, but not the average end-user. This has contributed to the paltry BI adoption rates of approximately 25% bandied about in the industry, despite the fact that investment in BI and its priority for companies have never been higher than over the last five years. Making report production cheaper to the point of nearly being free, something SaaS BI is poised to do (see trend 5), is still unlikely to improve this situation much. Instead, we will see a resurgence in collaborative decision-centric business intelligence offerings that make decisions their central focus. From an operational perspective, this is certainly in evidence with the proliferation of rules-based approaches that can automate thousands of operational decisions with little human intervention. However, for more tactical and strategic decisions, mash-ups will allow users to assemble all of the relevant data for making a decision, social capabilities will allow users to discuss this relevant data to generate “crowdsourced” wisdom, and explicit decisions, along with automated inferences, will be captured and correlated against outcomes.
This will allow decision-centric business intelligence to make recommendations within process contexts for what the appropriate next action should be, along with confidence intervals for the expected outcome, and to tell the user what the risks of her decisions are and how they will impact both the company’s and her own personal performance.

4. Performance, risk, and compliance management will continue to become unified in a process-based framework and make the leap out of the CFO’s office. The disciplines of performance, risk, and compliance management have been considered separate for a long time, but the walls are breaking down, as we documented thoroughly in Driven to Perform. Performance management begins with the goals that the organization is trying to achieve, and as risk management has evolved from its siloed roots into Enterprise Risk Management, it has become clear that risks must be identified and assessed in light of this same goal context. Similarly, in the wake of Sarbanes-Oxley, as compliance has become an extremely thorny and expensive issue for companies of all sizes, modern approaches suggest that compliance is ineffective when cast as a process of signing off on thousands of individual checklist items, and should instead be based on an organization’s risks. All three of these disciplines need to become unified in a process-based framework that allows for effective organizational governance. And while financial performance, risk, and compliance management are clearly the areas of most significant investment for most companies, it is clear that these concerns are now finally becoming enterprise-level plays that are escaping the confines of the Office of the CFO. We will continue to witness significant investment in sales and marketing performance management, as vendors like Right90 continue to gain traction in improving the sales forecasting process and vendors like Varicent receive hefty $35 million venture rounds this year, no doubt thanks to experiencing over 100% year-over-year growth in the burgeoning Sales Performance Management category. My former Siebel colleague, Bruce Cleveland, now a partner at Interwest, makes the case for this market expansion of performance management into the front office rather convincingly and has invested correspondingly.

5. SaaS / Cloud BI Tools will steal significant revenue from on-premise vendors but also fight for limited oxygen amongst themselves. From many accounts, this was the year that SaaS-based offerings hit the mainstream due to their numerous advantages over on-premise offerings, and this certainly was in evidence with the significant uptick in investment and market visibility of SaaS BI vendors. Although much was made of the folding of LucidEra, one of the original pioneers in the space, and while other vendors like BlinkLogic folded as well, vendors like Birst, PivotLink, Good Data, Indicee and others continue to announce wins at a fair clip along with innovations at a fraction of the cost of their on-premise brethren. From a functionality perspective, these tools offer great usability, some collaboration features, strong visualization capabilities, and an ease of use not seen with their on-premise equivalents, whereby users are able to manage the system in a self-sufficient fashion without the need for significant IT involvement. I have long argued that basic reporting and analysis is now a commodity, so there is little reason for any customer to invest in on-premise capabilities at the price/performance ratio that the SaaS vendors are offering (see BI SaaS Vendors Are Not Created Equal). We should thus expect to see continued diminution of the on-premise vendors' BI revenue streams as the SaaS BI value proposition goes mainstream, although it wouldn’t be surprising to see acquisitions by the large vendors to stem the tide. However, with so many small players in the market offering largely similar capabilities, the SaaS BI tools vendors may wind up starving themselves for oxygen as they put price pressure on each other to gain new customers.
Only vendors whose offerings were designed from the beginning for cloud-scale architecture, and whose marginal cost per additional user thus approaches zero, will succeed in such a commodity pricing environment. Alternatively, these vendors can go upstream and try to compete in the enterprise, where the risks and rewards of competition are much higher. On the other hand, packaged SaaS BI Applications such as those offered by Host Analytics, Adaptive Planning, and new entrant Anaplan, while showing promising growth, have yet to mature to mainstream adoption, but are poised to do so in the coming years. As with all SaaS applications, addressing key integration and security concerns will remain crucial to driving adoption.

6. The undeniable arrival of the era of big data will lead to further proliferation in data management alternatives. While analytic-centric OLAP databases such as Oracle Express, Hyperion Essbase, and Microsoft Analysis Services have been around for decades, they have never held the same dominant market share from an applications consumption perspective that the RDBMS vendors have enjoyed over the last few decades. No matter what the application type, the RDBMS seemed to be the answer. However, we have witnessed an explosion of exciting data management offerings in the last few years that have reinvigorated the information management sector of the industry. The largest web players such as Google (BigTable), Yahoo (Hadoop), Amazon (Dynamo), and Facebook (Cassandra) have built their own solutions to handle their own incredible data volumes, with the open source Hadoop ecosystem and commercial offerings like Cloudera leading the charge in broad awareness. Additionally, a whole new industry of DBMSs dedicated to analytic workloads (ADBMSs) has sprung up, with flagship vendors like Netezza, Greenplum, Vertica, Aster Data, and the like delivering significant innovations in in-memory processing, exploiting parallelism, columnar storage options, and more. We are already starting to see hybrid approaches between the Hadoop players and the ADBMS players, and even the largest vendors like Oracle with their Exadata offering are excited enough to make significant investments in this space. Additionally, significant opportunities to push application processing into the databases themselves are emerging. There has never been such a plethora of choices available, as new entrants to the market seem to crop up weekly. Visionary applications of this technology in areas with massive data volumes, like meteorological forecasting and genomic sequencing, will become possible at hitherto unimaginable price points.

7. Advanced Visualization will continue to increase in depth and relevance to broader audiences. Visionary vendors like Tableau, QlikTech, and Spotfire (now Tibco) made their mark by providing significantly differentiated visualization capabilities compared with the trite bar and pie charts of most BI players' reporting tools. The latest advances in UI technologies such as Microsoft’s Silverlight, Adobe Flex, and AJAX via frameworks like Google Web Toolkit augur a revolution in state-of-the-art visualization capabilities. With consumers broadly aware of the power of capabilities like Google Maps or the tactile manipulations possible on the iPhone, these capabilities will find their way into enterprise offerings at a rapid speed, lest the gap between the consumer and enterprise realms become too large and lead to large-scale adoption revolts as a younger generation begins to enter the workforce having never known the green screens of yore.

9. Data Quality, Data Integration, and Data Virtualization will merge with Master Data Management to form a unified Information Management Platform for structured and unstructured data. Data quality has been the bane of information systems for as long as they have existed, causing many an IT analyst to obsess over it, and data quality issues contribute to significant losses in system adoption, productivity, and time spent addressing them. Increasingly, data quality and data integration will be interlocked to ensure that the right, cleansed data is moved to downstream systems by attacking the problem at its root. Vendors including SAP BusinessObjects, SAS, Informatica, and Talend are all providing these capabilities to some degree today. Of course, with the number of relevant data sources exploding in the enterprise and no way to integrate all of them into a single physical location while maintaining agility, vendors like Composite Software are providing data virtualization capabilities, whereby canonical information models can be overlaid on top of information assets regardless of where they are located, capable of addressing the federation of batch, real-time, and event data sources. These disparate data sources will need to be harmonized by strong Master Data Management capabilities, whereby the definitions of key entities in the enterprise like customers, suppliers, products, etc. can be used to provide semantic unification over these distributed data sources. Finally, structured, semi-structured, and unstructured information will all be able to be extracted, transformed, loaded, and queried from this ubiquitous information management platform by combining text analytics capabilities, which continue to grow in importance, with data virtualization capabilities.

10. Excel will continue to provide the dominant paradigm for end-user BI consumption. For Excel specifically, the number one analytic tool by far with a home on hundreds of millions of personal desktops, Microsoft has invested significantly in ensuring its continued viability as we move past its second decade of existence, and its adoption shows absolutely no sign of abating any time soon. With Excel 2010's arrival, this includes significantly enhanced charting capabilities, a server-based mode first released in 2007 called Excel Services, being a first-class citizen in SharePoint, and the biggest disruptor, the launch of PowerPivot, an extremely fast, scalable, in-memory analytic engine that can allow Excel analysis on millions of rows of data at sub-second speeds. While many vendors have tried in vain to displace Excel from the desktops of the business user for more than two decades, none will be any closer to succeeding any time soon. Microsoft will continue to make sure of that.
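
For readers who have not seen Complex Event Processing up close (trend 2), here is a minimal sketch of the "sense and respond" idea: evaluate a rule over a sliding time window as each event arrives, so that the decision is made at the moment of the event instead of in a report the next day. Everything in it is a hypothetical illustration of my own (the `Event` fields, the `SlidingWindowDetector` class, the 60-second window, and the 1000 threshold); it is not the API of any CEP product mentioned above, and real engines offer far richer pattern languages.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Event:
    timestamp: float  # seconds; hypothetical field names for illustration
    account: str
    amount: float


class SlidingWindowDetector:
    """Flags an event when the per-account total inside the window exceeds a threshold."""

    def __init__(self, window_s: float, threshold: float):
        self.window_s = window_s
        self.threshold = threshold
        self._events = {}  # account -> deque of (timestamp, amount)
        self._totals = {}  # account -> running total of amounts in the window

    def on_event(self, event: Event) -> bool:
        window = self._events.setdefault(event.account, deque())
        window.append((event.timestamp, event.amount))
        total = self._totals.get(event.account, 0.0) + event.amount
        # Evict events that have fallen out of the time window.
        while window and window[0][0] <= event.timestamp - self.window_s:
            _, old_amount = window.popleft()
            total -= old_amount
        self._totals[event.account] = total
        return total > self.threshold


detector = SlidingWindowDetector(window_s=60, threshold=1000)
stream = [
    Event(0, "A", 400),
    Event(10, "A", 500),
    Event(20, "B", 50),
    Event(30, "A", 200),   # account A now totals 1100 within 60 seconds
    Event(100, "A", 300),  # earlier A events have expired from the window
]
alerts = [e for e in stream if detector.on_event(e)]
for e in alerts:
    print(f"alert: account {e.account} at t={e.timestamp}")
```

The running total is kept per account so that each incoming event costs only the work of evicting expired entries, which is the property that keeps latency between event and decision low.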

And so ends my list of prognostications for Analytics, Business Intelligence, and Performance Management in 2010! What are yours? I welcome your feedback on this list and look forward to hearing your own views on the topic.